Emotion to Typography | Interactive Art |

Aim: To create an interactive ETT (Emotion-to-Typography) model which generates typography based on the user's emotions and text input.

Process: Literature review, dataset creation, ETT model creation, user testing

Tools: OpenCV, Python, Google Forms

Motivation

Multisensory design, an interdisciplinary approach in HCI, aims to deliver utility, artistic challenge, and entertainment by engaging multiple senses at once. Inspired by related research in this domain, we propose a multisensory experience that uses emotion detection to compose typography, enabling users to better convey their emotions through words.

Limited research exists in the field of dynamic typography. We saw an opportunity to introduce facial emotion recognition to add novelty to the project, and assembled a model that depicts the emotional connotations of typography as we understand them today.

Literature Review

Wong’s 1996 research proposes “temporal typography” to illustrate the expressive and dynamic power of typography and how it can be used to convey emotions and tone of voice. The research offers alternative approaches and applications for traditional text messaging, and provides crucial insight into how expressive, creative typography has remained a relatively untapped domain in computing and communication over the past few decades.

We cannot talk about typography as artwork without addressing kinetic typography. Lee et al.’s work recognizes the quality gap in text communication and shows that enhancing a text’s expressive properties can convey richer emotions. In their study, 24 kinetic typography examples designed to convey 4 emotions successfully conveyed the intended emotion.

However, little research exists on using facial emotion recognition to translate user emotions into typographic artwork. Our review of existing research informed us about systems developed around similar hypotheses, which we used to construct and iterate on our research question.

We aim to create a typography-emotion dataset and a multisensory model that takes a user’s emotion as input, detected through facial emotion recognition, and predicts a typographic artwork intended to ‘convey’ that emotion. The novelty of our ETT (Emotion-to-Typography) model lies in its interdisciplinary approach to depicting the emotional connotations of typography as we understand them today.

Building a Dataset

We deconstructed typography into two main elements, color and font, and constructed a dataset large enough to map typography to emotions.

Colour-Emotion

The colour-emotion data was taken from existing research: “A machine learning approach to quantify the specificity of colour–emotion associations and their cultural differences” (Jonauskaite et al., 2019).

Participants were asked to rate the intensity of the association between 20 emotions and a given colour on a 6-point rating scale. The authors used a support vector machine (SVM) classifier to predict which colour was evaluated on a given trial from the 20 colour–emotion association ratings. Across the 10 cross-validations, the area under the ROC curve (AUC) was 0.830 (38.7% correctly classified instances).
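The two reported figures measure different things. As a rough illustration only (this is not the cited authors' code, which used an SVM), the sketch below computes AUC for a single colour treated as a one-vs-rest decision, using hypothetical classifier scores. AUC measures how well the classifier ranks the true colour above the alternatives, whereas the percentage of correctly classified instances requires the top prediction to be exactly right, which helps explain how a fairly high AUC can coexist with a much lower accuracy.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via the pairwise (Mann-Whitney) formulation.

    AUC equals the probability that a randomly chosen positive example is
    scored higher than a randomly chosen negative one; ties count as half.
    labels: 1 for the target colour, 0 for any other colour (one-vs-rest).
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical scores for "was this trial about red?" (illustrative data only).
scores = [0.9, 0.8, 0.4, 0.35, 0.2]
labels = [1, 0, 1, 0, 0]
# 5 of the 6 positive-negative pairs are ranked correctly, so AUC = 5/6.
print(roc_auc(scores, labels))
```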

Font-Emotion

We sent out a survey to 25 participants aged 18 to 55. Each question presented the phrase ‘Today is a Wednesday!’ in a different font; the sentence was chosen for its neutrality and objectivity so as not to introduce bias. For each font, participants selected the emotions they felt when reading the text, choosing from the 6 basic emotions proposed by Paul Ekman. The survey had 20 questions covering 20 individual fonts.
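One way to turn such multi-select survey answers into a font-emotion dataset is to compute, for each font, the share of participants who ticked each emotion. The sketch below assumes the responses have been exported as `(font, [emotions])` pairs; the function names, the lowercase emotion labels and the example fonts and answers are hypothetical, not the real survey data.

```python
from collections import Counter

# Paul Ekman's six basic emotions, the answer options in the survey.
EKMAN_EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")


def font_emotion_profile(responses):
    """Aggregate survey answers into per-font emotion vote shares.

    responses: iterable of (font_name, [selected emotions]) pairs, one per
    participant per question. Participants may select several emotions.
    Returns {font_name: {emotion: share_of_participants}}.
    """
    votes = {}
    respondents = Counter()
    for font, selected in responses:
        respondents[font] += 1
        counter = votes.setdefault(font, Counter())
        for emotion in set(selected):  # ignore duplicate ticks
            if emotion not in EKMAN_EMOTIONS:
                raise ValueError(f"unknown emotion: {emotion}")
            counter[emotion] += 1
    return {
        font: {e: votes[font][e] / respondents[font] for e in EKMAN_EMOTIONS}
        for font in votes
    }


def dominant_emotion(profile):
    """Return the emotion chosen by the largest share of participants."""
    return max(profile, key=profile.get)


# Hypothetical answers for two fonts (not the project's survey results).
responses = [
    ("Comic Sans MS", ["happiness"]),
    ("Comic Sans MS", ["happiness", "surprise"]),
    ("Comic Sans MS", ["surprise"]),
    ("Chiller", ["fear"]),
    ("Chiller", ["fear", "disgust"]),
]
profiles = font_emotion_profile(responses)
print(dominant_emotion(profiles["Chiller"]))  # → fear
```

Keeping vote shares rather than only the winning emotion preserves fonts that evoke several emotions, which matters when fonts are later paired with colours.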

Typography-Emotion

To compile the typography-emotion dataset, we analysed our font-emotion and color-emotion datasets and mapped fonts and colors to each other based on the emotions they had in common. This gives a form of typography with 2 variables, namely font and color. We then mapped each typography to its respective emotion to create a typography-emotion dataset.
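The mapping step can be read as a join on shared emotions. The sketch below assumes each font and colour is associated with a set of emotions and pairs every font with every colour that shares an emotion; the function name and the example fonts, colours and associations are hypothetical, not the project's actual data.

```python
from itertools import product


def build_typography_emotion(font_emotions, colour_emotions):
    """Pair fonts and colours that share an emotion.

    font_emotions / colour_emotions: {name: set of associated emotions}.
    Returns {emotion: [(font, colour), ...]}: every font-colour pair whose
    members are both associated with that emotion.
    """
    emotions = set().union(*font_emotions.values(), *colour_emotions.values())
    typography = {}
    for emotion in sorted(emotions):
        fonts = sorted(f for f, es in font_emotions.items() if emotion in es)
        colours = sorted(c for c, es in colour_emotions.items() if emotion in es)
        pairs = list(product(fonts, colours))
        if pairs:  # keep only emotions covered by both datasets
            typography[emotion] = pairs
    return typography


# Hypothetical associations for illustration only.
fonts = {"Chiller": {"fear"}, "Comic Sans MS": {"happy", "surprise"}}
colours = {"black": {"fear", "sad"}, "yellow": {"happy"}}
table = build_typography_emotion(fonts, colours)
print(table)
# {'fear': [('Chiller', 'black')], 'happy': [('Comic Sans MS', 'yellow')]}
```

Emotions covered by only one of the two datasets ("sad" and "surprise" above) are dropped, since they cannot yield a complete font-plus-colour typography.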

Building the ETT Model

Facial Emotion Recognition

We built an image-based face detector and emotion classifier using a convolutional neural network (CNN) and OpenCV. The model is trained on the FER2013 dataset, a large, publicly available dataset of 35,887 face crops. The images vary significantly in gender, age, lighting, pose, and other factors, so the dataset reflects realistic conditions and is well suited to our model. It is split into training, validation, and test samples. All images are grayscale, with a resolution of 48x48 pixels. The following basic expression labels are provided for all samples:

Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral
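Because the classifier is trained on 48x48 grayscale crops, a webcam face crop has to be brought into the same format before prediction. In practice this is usually done with OpenCV's `cv2.cvtColor` and `cv2.resize`; the dependency-free sketch below only illustrates the same steps on a nested list of RGB pixels. The nearest-neighbour resize and the scaling of pixel values to [0, 1] are assumptions, not details stated for this project, and note that OpenCV loads images in BGR rather than RGB order.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to luma using ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in rgb_image]


def resize_nearest(image, size=48):
    """Nearest-neighbour resize of a 2D list of values to size x size."""
    h, w = len(image), len(image[0])
    return [
        [image[y * h // size][x * w // size] for x in range(size)]
        for y in range(size)
    ]


def preprocess_face(rgb_crop, size=48):
    """Grayscale, resize to size x size, then scale pixel values to [0, 1]."""
    gray = resize_nearest(to_grayscale(rgb_crop), size)
    return [[v / 255.0 for v in row] for row in gray]


# Example: a solid red 96x96 crop becomes a 48x48 grid of identical values.
red_crop = [[(255, 0, 0)] * 96 for _ in range(96)]
face = preprocess_face(red_crop)
print(len(face), len(face[0]), round(face[0][0], 3))  # → 48 48 0.299
```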

Our CNN model has 7 layers. The first four stages extract features and produce a 512-dimensional feature vector, which is then passed to fully connected (Dense) layers for classification.
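The final Dense layer has to produce one score per FER2013 class, and those scores are turned into an emotion label. The sketch below assumes the last layer outputs 7 raw scores (logits) in FER2013's label order and applies a softmax outside the model; in Keras this is often folded into the model itself with `Dense(7, activation="softmax")`. The function names are hypothetical.

```python
import math

# FER2013 label order (class indices 0-6).
FER_LABELS = ("Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral")


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(logits):
    """Map the final Dense layer's 7 outputs to (label, probability)."""
    if len(logits) != len(FER_LABELS):
        raise ValueError("expected one logit per FER2013 class")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FER_LABELS[best], probs[best]


# Hypothetical raw scores for one frame.
label, prob = predict_emotion([0.1, -1.2, 0.3, 2.5, 0.0, 0.4, 1.1])
print(label)  # → Happy
```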

Model evaluation produced the following figures:

Training loss: 0.83, Training accuracy: 0.69

Testing loss: 0.95, Testing accuracy: 0.54

Emotion-To-Typography

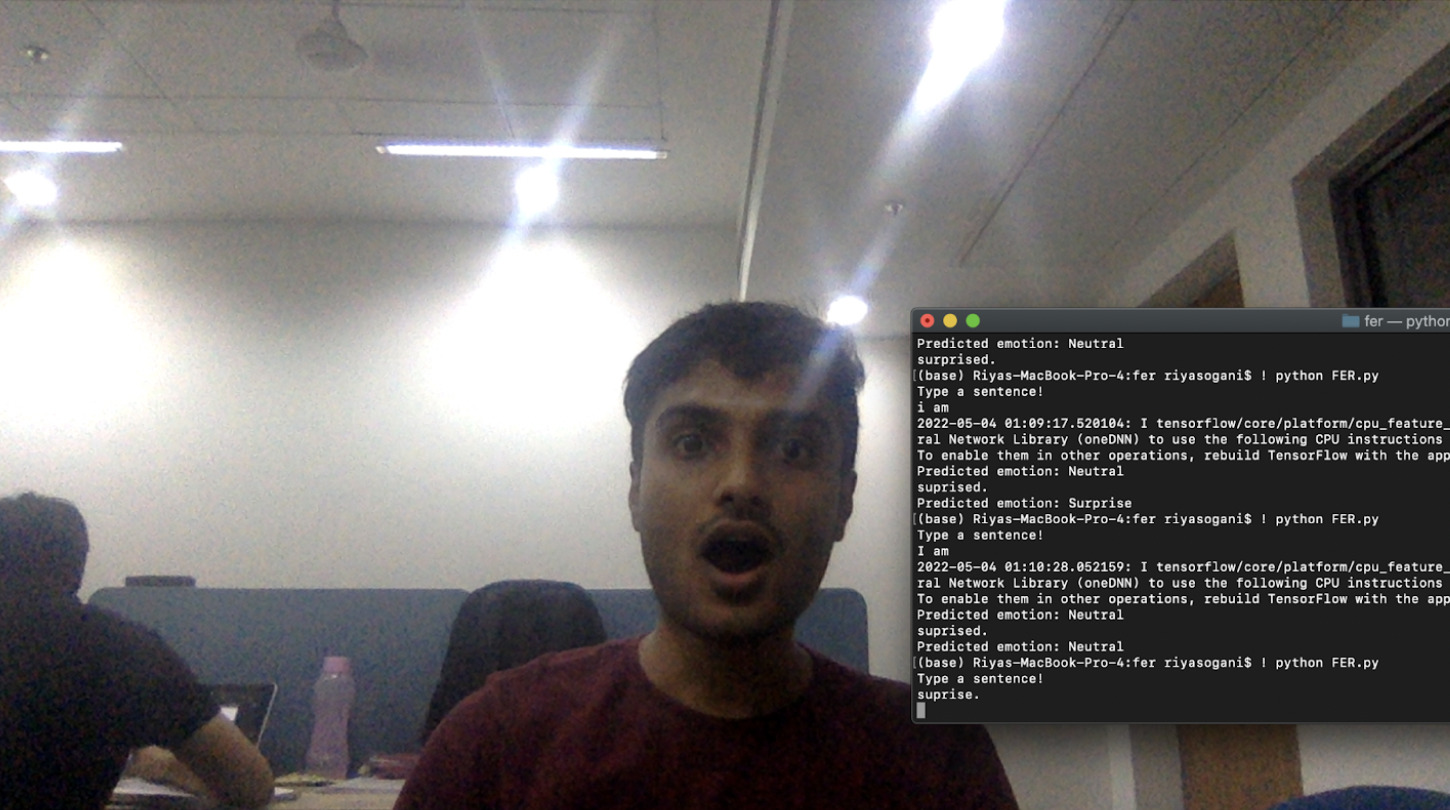

I wrote code that takes a live feed from the user’s webcam and classifies each frame as an emotion using the facial emotion recognition model.

Thus, the ETT (Emotion-to-Typography) model receives 2 kinds of user input: typed text and a live webcam feed. The user's emotions are detected through the webcam feed as they type, and the text is converted to typography based on those emotions.
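Since each webcam frame receives its own prediction, several frames are classified while a single sentence is typed, and they need to be reduced to one emotion. The source does not say how this is done; the sketch below shows one hypothetical option, a majority vote over the frames captured during typing that ignores low-confidence predictions. The `min_confidence` threshold and the "Neutral" fallback are assumptions.

```python
from collections import Counter


def emotion_while_typing(frame_labels, min_confidence=0.0):
    """Pick one emotion for a typed sentence from per-frame predictions.

    frame_labels: sequence of (label, confidence) pairs, one per webcam frame
    captured while the user typed. Frames below min_confidence are ignored.
    Returns the most frequent remaining label, or "Neutral" if none remain.
    """
    counts = Counter(label for label, conf in frame_labels if conf >= min_confidence)
    if not counts:
        return "Neutral"
    return counts.most_common(1)[0][0]


# Hypothetical per-frame predictions captured while one sentence was typed.
frames = [("Happy", 0.8), ("Neutral", 0.4), ("Happy", 0.7), ("Sad", 0.3)]
print(emotion_while_typing(frames, min_confidence=0.5))  # → Happy
```

A majority vote smooths out single-frame misclassifications, which is useful given the model's modest test accuracy.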

This conversion from text to typography is based on the typography-emotion dataset compiled from the emotion-color and emotion-font datasets described above.
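The final step looks up the detected emotion in the typography-emotion table and applies its font and colour to the text. The source does not specify the output medium, so the sketch below assumes an HTML rendering; the table entries, the default style and the function name are hypothetical placeholders, not the project's actual dataset.

```python
import html

# Hypothetical typography-emotion table: placeholder fonts and colours,
# NOT the project's actual dataset.
TYPOGRAPHY = {
    "Happy": ("Comic Sans MS", "#FFD700"),
    "Sad": ("Georgia", "#1E3A8A"),
    "Angry": ("Impact", "#B91C1C"),
}
DEFAULT = ("Arial", "#000000")  # used for emotions missing from the table


def to_typography(text, emotion):
    """Wrap text in an HTML span styled with the emotion's font and colour."""
    font, colour = TYPOGRAPHY.get(emotion, DEFAULT)
    style = f"font-family: '{font}'; color: {colour}"
    # Escape the user's text so characters like < and & render literally.
    return f'<span style="{style}">{html.escape(text)}</span>'


print(to_typography("Hello", "Happy"))
# <span style="font-family: 'Comic Sans MS'; color: #FFD700">Hello</span>
```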

User Testing

We evaluated this model with 4 participants. Users were given 5 minutes to experiment with the tool. They were then asked to choose a sentence they liked and act out emotions while typing it. They were able to generate sentences with appropriate typography.

We conducted a brief post-session interview with each participant, asking them about their experience and what they imagined the utility of such a model to be.

Participant 3 said that he felt as though he was ‘typing like Geronimo Stilton’!

Use Cases and Applications

Art installations/Virtual graffiti

Our ETT model can be demonstrated as an art installation or virtual graffiti generator wherein users can showcase a series of emotions and have the model visualize their emotions in the form of typography.

The relevance and novelty of the installation lie in being able to create typographic artworks that connote user emotions, thus sparking conversation around the domain of creative computing and its other applications.

Text-messaging application

Research (Lee et al., 2006) has shown that kinetic typography in text-messaging platforms conveys emotional intent better than regular typography. Applying our ETT model to a text-messaging platform would let the user's camera capture their emotion and turn their text messages into expressive typography.

Despite the advent of emoji, Bitmoji, Animoji, and similar tools, typography remains underexplored, even though expressive typography could lend itself to a better and more innovative user experience.